Multiple Regression- Ps: Sleep in mammals | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| *The author of this computation has been verified* | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| R Software Module: /rwasp_multipleregression.wasp (opens new window with default values) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Title produced by software: Multiple Regression | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Date of computation: Tue, 14 Dec 2010 09:50:13 +0000 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Cite this page as follows: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Statistical Computations at FreeStatistics.org, Office for Research Development and Education, URL http://www.freestatistics.org/blog/date/2010/Dec/14/t129232024680b1pjqfhaxggsd.htm/, Retrieved Tue, 14 Dec 2010 10:51:03 +0100 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| BibTeX entries for LaTeX users: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

@Manual{KEY,

author = {{YOUR NAME}},

publisher = {Office for Research Development and Education},

title = {Statistical Computations at FreeStatistics.org, URL http://www.freestatistics.org/blog/date/2010/Dec/14/t129232024680b1pjqfhaxggsd.htm/},

year = {2010},

}

@Manual{R,

title = {R: A Language and Environment for Statistical Computing},

author = {{R Development Core Team}},

organization = {R Foundation for Statistical Computing},

address = {Vienna, Austria},

year = {2010},

note = {{ISBN} 3-900051-07-0},

url = {http://www.R-project.org},

}

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Original text written by user: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| IsPrivate? | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| No (this computation is public) | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| User-defined keywords: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Dataseries X: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| » Textbox « » Textfile « » CSV « | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 6.3 2 4.5 1 6.6 42 3 1 3 2.1 1.8 69 2547 4603 624 3 5 4 9.1 0.7 27 10.55 179.5 180 4 4 4 15.8 3.9 19 0.023 0.3 35 1 1 1 5.2 1 30.4 160 169 392 4 5 4 10.9 3.6 28 3.3 25.6 63 1 2 1 8.3 1.4 50 52.16 440 230 1 1 1 11 1.5 7 0.42 6.4 112 5 4 4 3.2 0.7 30 465 423 281 5 5 5 6.3 2.1 3.5 0.075 1.2 42 1 1 1 6.6 4.1 6 0.785 3.5 42 2 2 2 9.5 1.2 10.4 0.2 5 120 2 2 2 3.3 0.5 20 27.66 115 148 5 5 5 11 3.4 3.9 0.12 1 16 3 1 2 4.7 1.5 41 85 325 310 1 3 1 10.4 3.4 9 0.101 4 28 5 1 3 7.4 0.8 7.6 1.04 5.5 68 5 3 4 2.1 0.8 46 521 655 336 5 5 5 17.9 2 24 0.1 0.25 50 1 1 1 6.1 1.9 100 62 1320 267 1 1 1 11.9 1.3 3.2 0.023 0.4 19 4 1 3 13.8 5.6 5 1.7 6.3 12 2 1 1 14.3 14.3 6.5 3.5 10.8 120 2 1 1 15.2 1.8 12 0.48 15.5 140 2 2 2 10 0.9 20.2 10 115 170 4 4 4 11.9 1.8 13 1.62 11.4 17 2 1 2 6.5 1.9 27 192 180 115 4 4 4 7.5 0.9 18 2.5 12.1 31 5 5 5 10.6 2.6 4.7 0.28 1.9 21 3 1 3 7.4 2.4 9.8 4.235 50.4 52 1 1 1 8.4 1.2 29 6.8 179 164 2 3 2 5.7 0.9 7 0.75 12.3 225 2 2 2 4.9 0.5 6 3.6 21 225 3 2 3 3.2 0.6 20 5 etc... | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Output produced by software: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Enter (or paste) a matrix (table) containing all data (time) series. Every column represents a different variable and must be delimited by a space or Tab. Every row represents a period in time (or category) and must be delimited by hard returns. The easiest way to enter data is to copy and paste a block of spreadsheet cells. Please, do not use commas or spaces to seperate groups of digits!

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

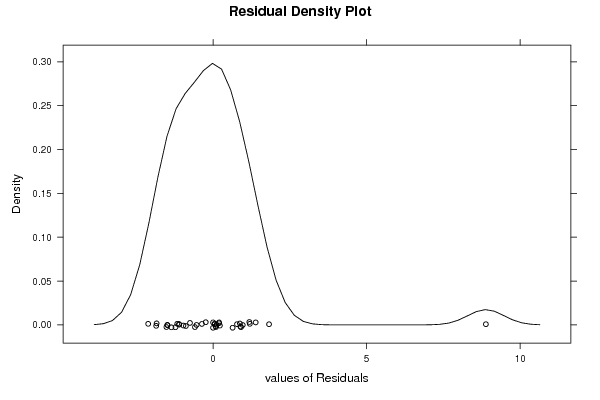

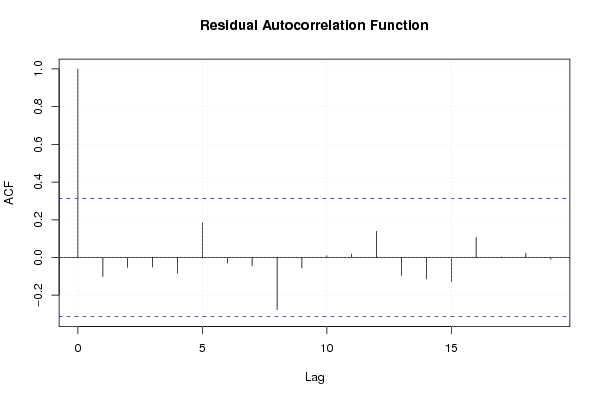

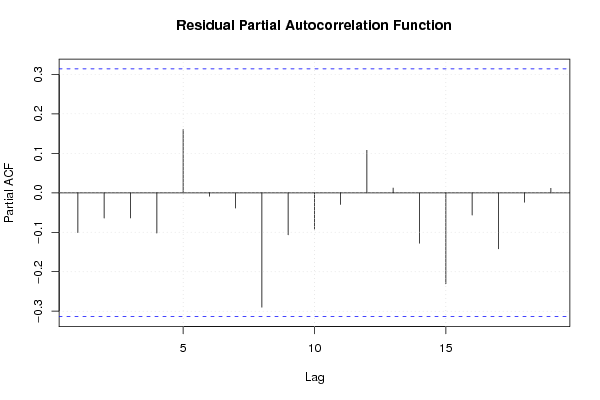

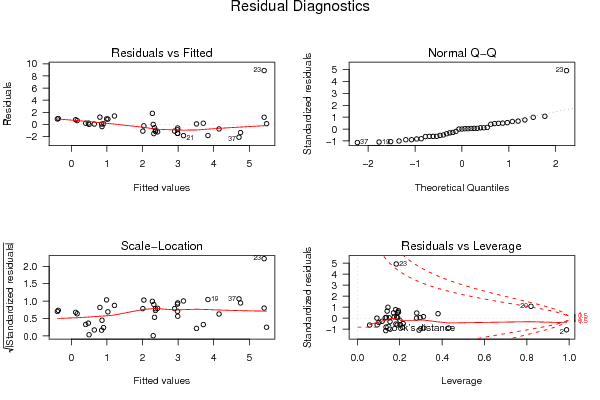

| Charts produced by software: | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Parameters (Session): | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| par1 = 1 ; par2 = Do not include Seasonal Dummies ; par3 = No Linear Trend ; | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Parameters (R input): | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| par1 = 2 ; par2 = Do not include Seasonal Dummies ; par3 = No Linear Trend ; | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| R code (references can be found in the software module): | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| library(lattice)

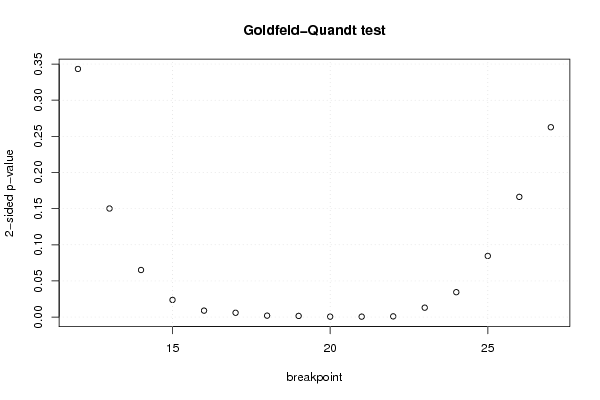

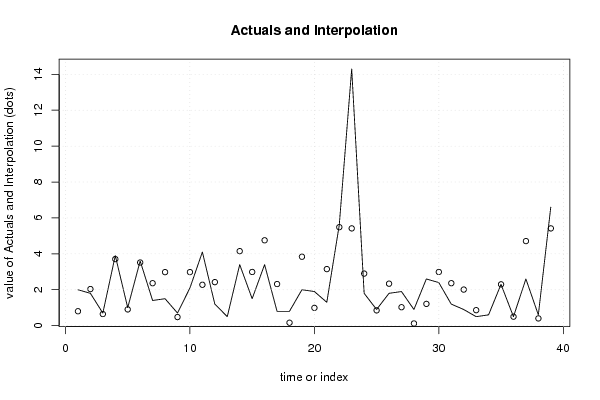

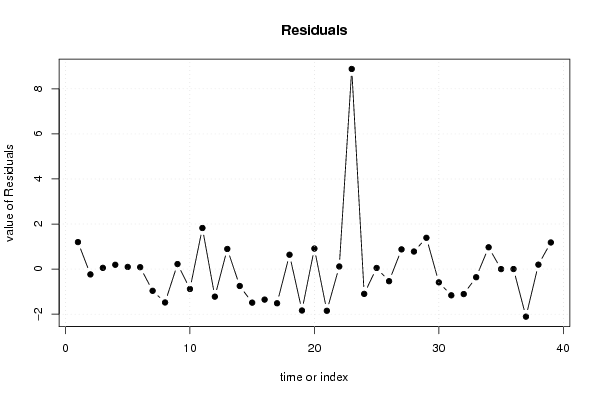

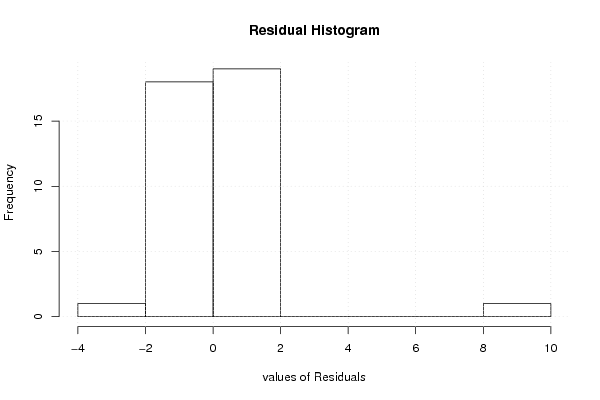

library(lmtest) n25 <- 25 #minimum number of obs. for Goldfeld-Quandt test par1 <- as.numeric(par1) x <- t(y) k <- length(x[1,]) n <- length(x[,1]) x1 <- cbind(x[,par1], x[,1:k!=par1]) mycolnames <- c(colnames(x)[par1], colnames(x)[1:k!=par1]) colnames(x1) <- mycolnames #colnames(x)[par1] x <- x1 if (par3 == 'First Differences'){ x2 <- array(0, dim=c(n-1,k), dimnames=list(1:(n-1), paste('(1-B)',colnames(x),sep=''))) for (i in 1:n-1) { for (j in 1:k) { x2[i,j] <- x[i+1,j] - x[i,j] } } x <- x2 } if (par2 == 'Include Monthly Dummies'){ x2 <- array(0, dim=c(n,11), dimnames=list(1:n, paste('M', seq(1:11), sep =''))) for (i in 1:11){ x2[seq(i,n,12),i] <- 1 } x <- cbind(x, x2) } if (par2 == 'Include Quarterly Dummies'){ x2 <- array(0, dim=c(n,3), dimnames=list(1:n, paste('Q', seq(1:3), sep =''))) for (i in 1:3){ x2[seq(i,n,4),i] <- 1 } x <- cbind(x, x2) } k <- length(x[1,]) if (par3 == 'Linear Trend'){ x <- cbind(x, c(1:n)) colnames(x)[k+1] <- 't' } x k <- length(x[1,]) df <- as.data.frame(x) (mylm <- lm(df)) (mysum <- summary(mylm)) if (n > n25) { kp3 <- k + 3 nmkm3 <- n - k - 3 gqarr <- array(NA, dim=c(nmkm3-kp3+1,3)) numgqtests <- 0 numsignificant1 <- 0 numsignificant5 <- 0 numsignificant10 <- 0 for (mypoint in kp3:nmkm3) { j <- 0 numgqtests <- numgqtests + 1 for (myalt in c('greater', 'two.sided', 'less')) { j <- j + 1 gqarr[mypoint-kp3+1,j] <- gqtest(mylm, point=mypoint, alternative=myalt)$p.value } if (gqarr[mypoint-kp3+1,2] < 0.01) numsignificant1 <- numsignificant1 + 1 if (gqarr[mypoint-kp3+1,2] < 0.05) numsignificant5 <- numsignificant5 + 1 if (gqarr[mypoint-kp3+1,2] < 0.10) numsignificant10 <- numsignificant10 + 1 } gqarr } bitmap(file='test0.png') plot(x[,1], type='l', main='Actuals and Interpolation', ylab='value of Actuals and Interpolation (dots)', xlab='time or index') points(x[,1]-mysum$resid) grid() dev.off() bitmap(file='test1.png') plot(mysum$resid, type='b', pch=19, main='Residuals', ylab='value of Residuals', xlab='time or index') grid() dev.off() bitmap(file='test2.png') hist(mysum$resid, main='Residual Histogram', xlab='values of Residuals') grid() dev.off() bitmap(file='test3.png') densityplot(~mysum$resid,col='black',main='Residual Density Plot', xlab='values of Residuals') dev.off() bitmap(file='test4.png') qqnorm(mysum$resid, main='Residual Normal Q-Q Plot') qqline(mysum$resid) grid() dev.off() (myerror <- as.ts(mysum$resid)) bitmap(file='test5.png') dum <- cbind(lag(myerror,k=1),myerror) dum dum1 <- dum[2:length(myerror),] dum1 z <- as.data.frame(dum1) z plot(z,main=paste('Residual Lag plot, lowess, and regression line'), ylab='values of Residuals', xlab='lagged values of Residuals') lines(lowess(z)) abline(lm(z)) grid() dev.off() bitmap(file='test6.png') acf(mysum$resid, lag.max=length(mysum$resid)/2, main='Residual Autocorrelation Function') grid() dev.off() bitmap(file='test7.png') pacf(mysum$resid, lag.max=length(mysum$resid)/2, main='Residual Partial Autocorrelation Function') grid() dev.off() bitmap(file='test8.png') opar <- par(mfrow = c(2,2), oma = c(0, 0, 1.1, 0)) plot(mylm, las = 1, sub='Residual Diagnostics') par(opar) dev.off() if (n > n25) { bitmap(file='test9.png') plot(kp3:nmkm3,gqarr[,2], main='Goldfeld-Quandt test',ylab='2-sided p-value',xlab='breakpoint') grid() dev.off() } load(file='createtable') a<-table.start() a<-table.row.start(a) a<-table.element(a, 'Multiple Linear Regression - Estimated Regression Equation', 1, TRUE) a<-table.row.end(a) myeq <- colnames(x)[1] myeq <- paste(myeq, '[t] = ', sep='') for (i in 1:k){ if (mysum$coefficients[i,1] > 0) myeq <- paste(myeq, '+', '') myeq <- paste(myeq, mysum$coefficients[i,1], sep=' ') if (rownames(mysum$coefficients)[i] != '(Intercept)') { myeq <- paste(myeq, rownames(mysum$coefficients)[i], sep='') if (rownames(mysum$coefficients)[i] != 't') myeq <- paste(myeq, '[t]', sep='') } } myeq <- paste(myeq, ' + e[t]') a<-table.row.start(a) a<-table.element(a, myeq) a<-table.row.end(a) a<-table.end(a) table.save(a,file='mytable1.tab') a<-table.start() a<-table.row.start(a) a<-table.element(a,hyperlink('http://www.xycoon.com/ols1.htm','Multiple Linear Regression - Ordinary Least Squares',''), 6, TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'Variable',header=TRUE) a<-table.element(a,'Parameter',header=TRUE) a<-table.element(a,'S.D.',header=TRUE) a<-table.element(a,'T-STAT<br />H0: parameter = 0',header=TRUE) a<-table.element(a,'2-tail p-value',header=TRUE) a<-table.element(a,'1-tail p-value',header=TRUE) a<-table.row.end(a) for (i in 1:k){ a<-table.row.start(a) a<-table.element(a,rownames(mysum$coefficients)[i],header=TRUE) a<-table.element(a,mysum$coefficients[i,1]) a<-table.element(a, round(mysum$coefficients[i,2],6)) a<-table.element(a, round(mysum$coefficients[i,3],4)) a<-table.element(a, round(mysum$coefficients[i,4],6)) a<-table.element(a, round(mysum$coefficients[i,4]/2,6)) a<-table.row.end(a) } a<-table.end(a) table.save(a,file='mytable2.tab') a<-table.start() a<-table.row.start(a) a<-table.element(a, 'Multiple Linear Regression - Regression Statistics', 2, TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'Multiple R',1,TRUE) a<-table.element(a, sqrt(mysum$r.squared)) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'R-squared',1,TRUE) a<-table.element(a, mysum$r.squared) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'Adjusted R-squared',1,TRUE) a<-table.element(a, mysum$adj.r.squared) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'F-TEST (value)',1,TRUE) a<-table.element(a, mysum$fstatistic[1]) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'F-TEST (DF numerator)',1,TRUE) a<-table.element(a, mysum$fstatistic[2]) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'F-TEST (DF denominator)',1,TRUE) a<-table.element(a, mysum$fstatistic[3]) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'p-value',1,TRUE) a<-table.element(a, 1-pf(mysum$fstatistic[1],mysum$fstatistic[2],mysum$fstatistic[3])) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'Multiple Linear Regression - Residual Statistics', 2, TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'Residual Standard Deviation',1,TRUE) a<-table.element(a, mysum$sigma) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'Sum Squared Residuals',1,TRUE) a<-table.element(a, sum(myerror*myerror)) a<-table.row.end(a) a<-table.end(a) table.save(a,file='mytable3.tab') a<-table.start() a<-table.row.start(a) a<-table.element(a, 'Multiple Linear Regression - Actuals, Interpolation, and Residuals', 4, TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a, 'Time or Index', 1, TRUE) a<-table.element(a, 'Actuals', 1, TRUE) a<-table.element(a, 'Interpolation<br />Forecast', 1, TRUE) a<-table.element(a, 'Residuals<br />Prediction Error', 1, TRUE) a<-table.row.end(a) for (i in 1:n) { a<-table.row.start(a) a<-table.element(a,i, 1, TRUE) a<-table.element(a,x[i]) a<-table.element(a,x[i]-mysum$resid[i]) a<-table.element(a,mysum$resid[i]) a<-table.row.end(a) } a<-table.end(a) table.save(a,file='mytable4.tab') if (n > n25) { a<-table.start() a<-table.row.start(a) a<-table.element(a,'Goldfeld-Quandt test for Heteroskedasticity',4,TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'p-values',header=TRUE) a<-table.element(a,'Alternative Hypothesis',3,header=TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'breakpoint index',header=TRUE) a<-table.element(a,'greater',header=TRUE) a<-table.element(a,'2-sided',header=TRUE) a<-table.element(a,'less',header=TRUE) a<-table.row.end(a) for (mypoint in kp3:nmkm3) { a<-table.row.start(a) a<-table.element(a,mypoint,header=TRUE) a<-table.element(a,gqarr[mypoint-kp3+1,1]) a<-table.element(a,gqarr[mypoint-kp3+1,2]) a<-table.element(a,gqarr[mypoint-kp3+1,3]) a<-table.row.end(a) } a<-table.end(a) table.save(a,file='mytable5.tab') a<-table.start() a<-table.row.start(a) a<-table.element(a,'Meta Analysis of Goldfeld-Quandt test for Heteroskedasticity',4,TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'Description',header=TRUE) a<-table.element(a,'# significant tests',header=TRUE) a<-table.element(a,'% significant tests',header=TRUE) a<-table.element(a,'OK/NOK',header=TRUE) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'1% type I error level',header=TRUE) a<-table.element(a,numsignificant1) a<-table.element(a,numsignificant1/numgqtests) if (numsignificant1/numgqtests < 0.01) dum <- 'OK' else dum <- 'NOK' a<-table.element(a,dum) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'5% type I error level',header=TRUE) a<-table.element(a,numsignificant5) a<-table.element(a,numsignificant5/numgqtests) if (numsignificant5/numgqtests < 0.05) dum <- 'OK' else dum <- 'NOK' a<-table.element(a,dum) a<-table.row.end(a) a<-table.row.start(a) a<-table.element(a,'10% type I error level',header=TRUE) a<-table.element(a,numsignificant10) a<-table.element(a,numsignificant10/numgqtests) if (numsignificant10/numgqtests < 0.1) dum <- 'OK' else dum <- 'NOK' a<-table.element(a,dum) a<-table.row.end(a) a<-table.end(a) table.save(a,file='mytable6.tab') } | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Copyright

This work is licensed under a

Creative Commons Attribution-Noncommercial-Share Alike 3.0 License.

Software written by Ed van Stee & Patrick Wessa

Disclaimer

Information provided on this web site is provided "AS IS" without warranty of any kind, either express or implied, including, without limitation, warranties of merchantability, fitness for a particular purpose, and noninfringement. We use reasonable efforts to include accurate and timely information and periodically update the information, and software without notice. However, we make no warranties or representations as to the accuracy or completeness of such information (or software), and we assume no liability or responsibility for errors or omissions in the content of this web site, or any software bugs in online applications. Your use of this web site is AT YOUR OWN RISK. Under no circumstances and under no legal theory shall we be liable to you or any other person for any direct, indirect, special, incidental, exemplary, or consequential damages arising from your access to, or use of, this web site.

Privacy Policy

We may request personal information to be submitted to our servers in order to be able to:

- personalize online software applications according to your needs

- enforce strict security rules with respect to the data that you upload (e.g. statistical data)

- manage user sessions of online applications

- alert you about important changes or upgrades in resources or applications

We NEVER allow other companies to directly offer registered users information about their products and services. Banner references and hyperlinks of third parties NEVER contain any personal data of the visitor.

We do NOT sell, nor transmit by any means, personal information, nor statistical data series uploaded by you to third parties.

We carefully protect your data from loss, misuse, alteration,

and destruction. However, at any time, and under any circumstance you

are solely responsible for managing your passwords, and keeping them

secret.

We store a unique ANONYMOUS USER ID in the form of a small 'Cookie' on your computer. This allows us to track your progress when using this website which is necessary to create state-dependent features. The cookie is used for NO OTHER PURPOSE. At any time you may opt to disallow cookies from this website - this will not affect other features of this website.

We examine cookies that are used by third-parties (banner and online ads) very closely: abuse from third-parties automatically results in termination of the advertising contract without refund. We have very good reason to believe that the cookies that are produced by third parties (banner ads) do NOT cause any privacy or security risk.

FreeStatistics.org is safe. There is no need to download any software to use the applications and services contained in this website. Hence, your system's security is not compromised by their use, and your personal data - other than data you submit in the account application form, and the user-agent information that is transmitted by your browser - is never transmitted to our servers.

As a general rule, we do not log on-line behavior of individuals (other than normal logging of webserver 'hits'). However, in cases of abuse, hacking, unauthorized access, Denial of Service attacks, illegal copying, hotlinking, non-compliance with international webstandards (such as robots.txt), or any other harmful behavior, our system engineers are empowered to log, track, identify, publish, and ban misbehaving individuals - even if this leads to ban entire blocks of IP addresses, or disclosing user's identity.