Free Statistics of Irreproducible Research!

| Description of Statistical Computation | |
|---|---|
| Author's title | |
| Author | *The author of this computation has been verified* |
| R Software Module | rwasp_regression_trees1.wasp |
| Title produced by software | Recursive Partitioning (Regression Trees) |
| Date of computation | Sun, 11 Dec 2011 10:20:26 -0500 |
| Cite this page as follows | Statistical Computations at FreeStatistics.org, Office for Research Development and Education, URL https://freestatistics.org/blog/index.php?v=date/2011/Dec/11/t1323616870mcr3n4kxvx47ju5.htm/, Retrieved Sun, 28 Apr 2024 22:33:11 +0000 |
| | Statistical Computations at FreeStatistics.org, Office for Research Development and Education, URL https://freestatistics.org/blog/index.php?pk=153803, Retrieved Sun, 28 Apr 2024 22:33:11 +0000 |
| Original text written by user | |
| IsPrivate? | No (this computation is public) |
| User-defined keywords | |
| Estimated Impact | 105 |

Tree of Dependent Computations

Family? (F = Feedback message, R = changed R code, M = changed R Module, P = changed Parameters, D = changed Data)

- [Kendall tau Correlation Matrix] [] [2010-12-05 17:44:33] [b98453cac15ba1066b407e146608df68]
- RMPD [Kendall tau Correlation Matrix] [WS10: pearson] [2011-12-11 13:35:32] [17977ad44e8eb3a4dcd5a9173c81cab3]
- RMP [Multiple Regression] [WS10: MR] [2011-12-11 14:26:04] [17977ad44e8eb3a4dcd5a9173c81cab3]
- RM [Recursive Partitioning (Regression Trees)] [WS10: RP2] [2011-12-11 15:20:26] [dfccbb29b87008a80f95a64515f2b3fe] [Current]
- R P [Recursive Partitioning (Regression Trees)] [] [2011-12-22 14:27:33] [3931071255a6f7f4a767409781cc5f7d]
- RMPD [Kendall tau Correlation Matrix] [pearson] [2011-12-22 16:25:44] [141ef847e2c5f8e947fe4eabcb0cf143]
- RMPD [Kendall tau Correlation Matrix] [Pearson] [2011-12-22 16:31:12] [141ef847e2c5f8e947fe4eabcb0cf143]
- RMPD [Kendall tau Correlation Matrix] [Kendall] [2011-12-22 16:47:26] [141ef847e2c5f8e947fe4eabcb0cf143]
- R PD [Recursive Partitioning (Regression Trees)] [regression trees] [2011-12-22 17:19:01] [141ef847e2c5f8e947fe4eabcb0cf143]
- [Recursive Partitioning (Regression Trees)] [categorie] [2011-12-22 18:35:16] [141ef847e2c5f8e947fe4eabcb0cf143]
- [Recursive Partitioning (Regression Trees)] [cross validation] [2011-12-22 19:04:08] [141ef847e2c5f8e947fe4eabcb0cf143]


Dataset

Dataseries X:

1418 210907 56 3 79 30 112285 869 120982 56 4 58 28 84786 1530 176508 54 12 60 38 83123 2172 179321 89 2 108 30 101193 901 123185 40 1 49 22 38361 463 52746 25 3 0 26 68504 3201 385534 92 0 121 25 119182 371 33170 18 0 1 18 22807 1192 101645 63 0 20 11 17140 1583 149061 44 5 43 26 116174 1439 165446 33 0 69 25 57635 1764 237213 84 0 78 38 66198 1495 173326 88 7 86 44 71701 1373 133131 55 7 44 30 57793 2187 258873 60 3 104 40 80444 1491 180083 66 9 63 34 53855 4041 324799 154 0 158 47 97668 1706 230964 53 4 102 30 133824 2152 236785 119 3 77 31 101481 1036 135473 41 0 82 23 99645 1882 202925 61 7 115 36 114789 1929 215147 58 0 101 36 99052 2242 344297 75 1 80 30 67654 1220 153935 33 5 50 25 65553 1289 132943 40 7 83 39 97500 2515 174724 92 0 123 34 69112 2147 174415 100 0 73 31 82753 2352 225548 112 5 81 31 85323 1638 223632 73 0 105 33 72654 1222 124817 40 0 47 25 30727 1812 221698 45 0 105 33 77873 1677 210767 60 3 94 35 117478 1579 170266 62 4 44 42 74007 1731 260561 75 1 114 43 90183 807 84853 31 4 38 30 61542 2452 294424 77 2 107 33 101494 829 101011 34 0 30 13 27570 1940 215641 46 0 71 32 55813 2662 325107 99 0 84 36 79215 186 7176 17 0 0 0 1423 1499 167542 66 2 59 28 55461 865 106408 30 1 33 14 31081 1793 96560 76 0 42 17 22996 2527 265769 146 2 96 32 83122 2747 269651 67 10 106 30 70106 1324 149112 56 6 56 35 60578 2702 175824 107 0 57 20 39992 1383 152871 58 5 59 28 79892 1179 111665 34 4 39 28 49810 2099 116408 61 1 34 39 71570 4308 362301 119 2 76 34 100708 918 78800 42 2 20 26 33032 1831 183167 66 0 91 39 82875 3373 277965 89 8 115 39 139077 1713 150629 44 3 85 33 71595 1438 168809 66 0 76 28 72260 496 24188 24 0 8 4 5950 2253 329267 259 8 79 39 115762 744 65029 17 5 21 18 32551 1161 101097 64 3 30 14 31701 2352 218946 41 1 76 29 80670 2144 244052 68 5 101 44 143558 4691 341570 168 1 94 21 117105 1112 103597 43 1 27 16 23789 2694 233328 132 5 92 28 120733 1973 256462 105 0 123 35 105195 1769 206161 71 12 75 28 73107 3148 311473 112 8 128 38 132068 2474 
235800 94 8 105 23 149193 2084 177939 82 8 55 36 46821 1954 207176 70 8 56 32 87011 1226 196553 57 2 41 29 95260 1389 174184 53 0 72 25 55183 1496 143246 103 5 67 27 106671 2269 187559 121 8 75 36 73511 1833 187681 62 2 114 28 92945 1268 119016 52 5 118 23 78664 1943 182192 52 12 77 40 70054 893 73566 32 6 22 23 22618 1762 194979 62 7 66 40 74011 1403 167488 45 2 69 28 83737 1425 143756 46 0 105 34 69094 1857 275541 63 4 116 33 93133 1840 243199 75 3 88 28 95536 1502 182999 88 6 73 34 225920 1441 135649 46 2 99 30 62133 1420 152299 53 0 62 33 61370 1416 120221 37 1 53 22 43836 2970 346485 90 0 118 38 106117 1317 145790 63 5 30 26 38692 1644 193339 78 2 100 35 84651 870 80953 25 0 49 8 56622 1654 122774 45 0 24 24 15986 1054 130585 46 5 67 29 95364 937 112611 41 0 46 20 26706 3004 286468 144 1 57 29 89691 2008 241066 82 0 75 45 67267 2547 148446 91 1 135 37 126846 1885 204713 71 1 68 33 41140 1626 182079 63 2 124 33 102860 1468 140344 53 6 33 25 51715 2445 220516 62 1 98 32 55801 1964 243060 63 4 58 29 111813 1381 162765 32 2 68 28 120293 1369 182613 39 3 81 28 138599 1659 232138 62 0 131 31 161647 2888 265318 117 10 110 52 115929 1290 85574 34 0 37 21 24266 2845 310839 92 9 130 24 162901 1982 225060 93 7 93 41 109825 1904 232317 54 0 118 33 129838 1391 144966 144 0 39 32 37510 602 43287 14 4 13 19 43750 1743 155754 61 4 74 20 40652 1559 164709 109 0 81 31 87771 2014 201940 38 0 109 31 85872 2143 235454 73 0 151 32 89275 2146 220801 75 1 51 18 44418 874 99466 50 0 28 23 192565 1590 92661 61 1 40 17 35232 1590 133328 55 0 56 20 40909 1210 61361 77 0 27 12 13294 2072 125930 75 4 37 17 32387 1281 100750 72 0 83 30 140867 1401 224549 50 4 54 31 120662 834 82316 32 4 27 10 21233 1105 102010 53 3 28 13 44332 1272 101523 42 0 59 22 61056 1944 243511 71 0 133 42 101338 391 22938 10 0 12 1 1168 761 41566 35 5 0 9 13497 1605 152474 65 0 106 32 65567 530 61857 25 4 23 11 25162 1988 99923 66 0 44 25 32334 1386 132487 41 0 71 36 40735 2395 317394 86 1 116 31 91413 387 21054 16 0 
4 0 855 1742 209641 42 5 62 24 97068 620 22648 19 0 12 13 44339 449 31414 19 0 18 8 14116 800 46698 45 0 14 13 10288 1684 131698 65 0 60 19 65622 1050 91735 35 0 7 18 16563 2699 244749 95 2 98 33 76643 1606 184510 49 7 64 40 110681 1502 79863 37 1 29 22 29011 1204 128423 64 8 32 38 92696 1138 97839 38 2 25 24 94785 568 38214 34 0 16 8 8773 1459 151101 32 2 48 35 83209 2158 272458 65 0 100 43 93815 1111 172494 52 0 46 43 86687 1421 108043 62 1 45 14 34553 2833 328107 65 3 129 41 105547 1955 250579 83 0 130 38 103487 2922 351067 95 3 136 45 213688 1002 158015 29 0 59 31 71220 1060 98866 18 0 25 13 23517 956 85439 33 0 32 28 56926 2186 229242 247 4 63 31 91721 3604 351619 139 4 95 40 115168 1035 84207 29 11 14 30 111194 1417 120445 118 0 36 16 51009 3261 324598 110 0 113 37 135777 1587 131069 67 4 47 30 51513 1424 204271 42 0 92 35 74163 1701 165543 65 1 70 32 51633 1249 141722 94 0 19 27 75345 946 116048 64 0 50 20 33416 1926 250047 81 0 41 18 83305 3352 299775 95 9 91 31 98952 1641 195838 67 1 111 31 102372 2035 173260 63 3 41 21 37238 2312 254488 83 10 120 39 103772 1369 104389 45 5 135 41 123969 1577 136084 30 0 27 13 27142 2201 199476 70 2 87 32 135400 961 92499 32 0 25 18 21399 1900 224330 83 1 131 39 130115 1254 135781 31 2 45 14 24874 1335 74408 67 4 29 7 34988 1597 81240 66 0 58 17 45549 207 14688 10 0 4 0 6023 1645 181633 70 2 47 30 64466 2429 271856 103 1 109 37 54990 151 7199 5 0 7 0 1644 474 46660 20 0 12 5 6179 141 17547 5 0 0 1 3926 1639 133368 36 1 37 16 32755 872 95227 34 0 37 32 34777 1318 152601 48 2 46 24 73224 1018 98146 40 0 15 17 27114 1383 79619 43 3 42 11 20760 1314 59194 31 6 7 24 37636 1335 139942 42 0 54 22 65461 1403 118612 46 2 54 12 30080 910 72880 33 0 14 19 24094 616 65475 18 2 16 13 69008 1407 99643 55 1 33 17 54968 771 71965 35 1 32 15 46090 766 77272 59 2 21 16 27507 473 49289 19 1 15 24 10672 1376 135131 66 0 38 15 34029 1232 108446 60 1 22 17 46300 1521 89746 36 3 28 18 24760 572 44296 25 0 10 20 18779 1059 77648 47 0 31 16 21280 
1544 181528 54 0 32 16 40662 1230 134019 53 0 32 18 28987 1206 124064 40 1 43 22 22827 1205 92630 40 4 27 8 18513 1255 121848 39 0 37 17 30594 613 52915 14 0 20 18 24006 721 81872 45 0 32 16 27913 1109 58981 36 7 0 23 42744 740 53515 28 2 5 22 12934 1126 60812 44 0 26 13 22574 728 56375 30 7 10 13 41385 689 65490 22 3 27 16 18653 592 80949 17 0 11 16 18472 995 76302 31 0 29 20 30976 1613 104011 55 6 25 22 63339 2048 98104 54 2 55 17 25568 705 67989 21 0 23 18 33747 301 30989 14 0 5 17 4154 1803 135458 81 3 43 12 19474 799 73504 35 0 23 7 35130 861 63123 43 1 34 17 39067 1186 61254 46 1 36 14 13310 1451 74914 30 0 35 23 65892 628 31774 23 1 0 17 4143 1161 81437 38 0 37 14 28579 1463 87186 54 0 28 15 51776 742 50090 20 0 16 17 21152 979 65745 53 0 26 21 38084 675 56653 45 0 38 18 27717 1241 158399 39 0 23 18 32928 676 46455 20 0 22 17 11342 1049 73624 24 0 30 17 19499 620 38395 31 0 16 16 16380 1081 91899 35 0 18 15 36874 1688 139526 151 0 28 21 48259 736 52164 52 0 32 16 16734 617 51567 30 2 21 14 28207 812 70551 31 0 23 15 30143 1051 84856 29 1 29 17 41369 1656 102538 57 1 50 15 45833 705 86678 40 0 12 15 29156 945 85709 44 0 21 10 35944 554 34662 25 0 18 6 36278 1597 150580 77 0 27 22 45588 982 99611 35 0 41 21 45097 222 19349 11 0 13 1 3895 1212 99373 63 1 12 18 28394 1143 86230 44 0 21 17 18632 435 30837 19 0 8 4 2325 532 31706 13 0 26 10 25139 882 89806 42 0 27 16 27975 608 62088 38 1 13 16 14483 459 40151 29 0 16 9 13127 578 27634 20 0 2 16 5839 826 76990 27 0 42 17 24069 509 37460 20 0 5 7 3738 717 54157 19 0 37 15 18625 637 49862 37 0 17 14 36341 857 84337 26 0 38 14 24548 830 64175 42 0 37 18 21792 652 59382 49 0 29 12 26263 707 119308 30 0 32 16 23686 954 76702 49 0 35 21 49303 1461 103425 67 1 17 19 25659 672 70344 28 0 20 16 28904 778 43410 19 0 7 1 2781 1141 104838 49 1 46 16 29236 680 62215 27 0 24 10 19546 1090 69304 30 6 40 19 22818 616 53117 22 3 3 12 32689 285 19764 12 1 10 2 5752 1145 86680 31 2 37 14 22197 733 84105 20 0 17 17 20055 888 77945 20 
0 28 19 25272 849 89113 39 0 19 14 82206 1182 91005 29 3 29 11 32073 528 40248 16 1 8 4 5444 642 64187 27 0 10 16 20154 947 50857 21 0 15 20 36944 819 56613 19 1 15 12 8019 757 62792 35 0 28 15 30884 894 72535 14 0 17 16 19540
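Dataseries X is printed as one flattened run of numbers, and it appears to hold seven values per observation (the first observation reads 1418 210907 56 3 79 30 112285). Assuming that row-by-row layout, a minimal base-R sketch can rebuild the data matrix. Only the first two observations are pasted in here, and the column names V1 to V7 are hypothetical because the page does not name the variables.

```r
# First two observations copied from "Dataseries X"; paste the full series in practice.
raw <- "1418 210907 56 3 79 30 112285 869 120982 56 4 58 28 84786"

# Split on whitespace and parse every token as a number.
values <- scan(text = raw, quiet = TRUE)

# Assumption: 7 variables per observation, stored one observation after another.
stopifnot(length(values) %% 7 == 0)
X <- matrix(values, ncol = 7, byrow = TRUE)
colnames(X) <- paste0("V", 1:7)  # hypothetical names; the page gives none
print(X)
```

If the full series is pasted in and the length check fails, the seven-column assumption is wrong for that data and the layout needs a second look.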

Tables (Output of Computation)


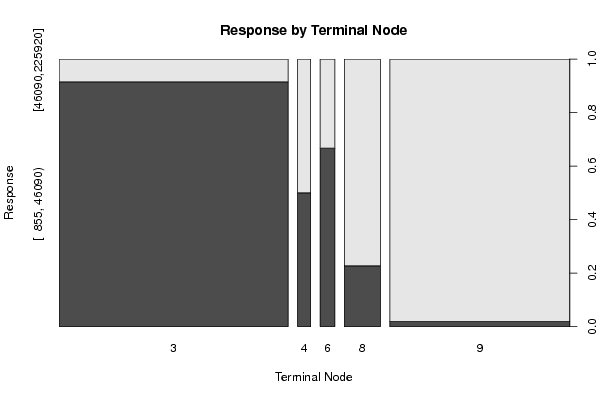

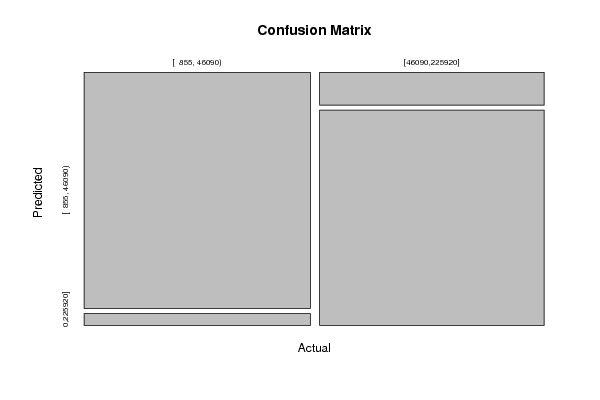

Figures (Output of Computation)

Input Parameters & R Code

Parameters (Session):

par1 = kendall ;

Parameters (R input):

par1 = 7 ; par2 = quantiles ; par3 = 2 ; par4 = no ;
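The page does not say what the R input parameters mean. One plausible reading, which is an assumption rather than something documented here, is that par1 = 7 picks column 7 as the response, par2 = quantiles with par3 = 2 cuts that response into two classes at its quantiles (for two classes, at the median), and par4 = no turns cross-validation off. Under that reading the discretization step could be sketched in base R as follows; `discretize_quantiles` is a hypothetical helper, not part of the module.

```r
# Hypothetical helper: cut a numeric vector into k classes at its empirical quantiles.
discretize_quantiles <- function(y, k) {
  breaks <- quantile(y, probs = seq(0, 1, length.out = k + 1), names = FALSE)
  # unique() drops repeated cut points caused by heavy ties, which cut() would reject;
  # include.lowest = TRUE keeps the minimum inside the first class.
  cut(y, breaks = unique(breaks), include.lowest = TRUE)
}

# Column 7 of the first six observations in "Dataseries X".
y <- c(112285, 84786, 83123, 101193, 38361, 68504)
classes <- discretize_quantiles(y, k = 2)
table(classes)
```

With these six values the median falls between 83123 and 84786, so three observations land in each class.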

R code (references can be found in the software module):

library(party)
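Only the first line of the module's R code, library(party), appears in this extract. For orientation, the sketch below fits a conditional inference tree with party's `ctree()` to the first ten observations. It is not the module's code: it assumes column 7 is the response, discretized into two classes at its median, with the other six columns as predictors, and the names V1 to V7 are hypothetical. Ten observations are fewer than the default `minsplit` of 20 in `ctree_control()`, so expect a single-node tree here; the full series is needed for real splits. The party package must be installed.

```r
library(party)

# First ten observations from "Dataseries X", seven values each.
raw <- "1418 210907 56 3 79 30 112285  869 120982 56 4 58 28 84786
        1530 176508 54 12 60 38 83123  2172 179321 89 2 108 30 101193
        901 123185 40 1 49 22 38361  463 52746 25 3 0 26 68504
        3201 385534 92 0 121 25 119182  371 33170 18 0 1 18 22807
        1192 101645 63 0 20 11 17140  1583 149061 44 5 43 26 116174"

# Rebuild the data frame; as.data.frame() on an unnamed matrix names columns V1 to V7.
X <- as.data.frame(matrix(scan(text = raw, quiet = TRUE), ncol = 7, byrow = TRUE))

# Assumption: par1 = 7, par2 = quantiles, par3 = 2 means "cut V7 at its median".
X$V7 <- cut(X$V7, breaks = quantile(X$V7, probs = c(0, 0.5, 1)),
            include.lowest = TRUE)

# Conditional inference tree: class of V7 explained by V1 to V6.
fit <- ctree(V7 ~ ., data = X)
print(fit)
```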