par1 <- as.numeric(par1)

(n <- length(x))

(np <- floor(n / par1))

arr <- array(NA,dim=c(par1,np))

j <- 0

k <- 1

for (i in 1:(np*par1))

{

j = j + 1

arr[j,k] <- x[i]

if (j == par1) {

j = 0

k=k+1

}

}

arr

arr.mean <- array(NA,dim=np)

arr.sd <- array(NA,dim=np)

arr.range <- array(NA,dim=np)

for (j in 1:np)

{

arr.mean[j] <- mean(arr[,j],na.rm=TRUE)

arr.sd[j] <- sd(arr[,j],na.rm=TRUE)

arr.range[j] <- max(arr[,j],na.rm=TRUE) - min(arr[,j],na.rm=TRUE)

}

arr.mean

arr.sd

arr.range

(lm1 <- lm(arr.sd~arr.mean))

(lnlm1 <- lm(log(arr.sd)~log(arr.mean)))

(lm2 <- lm(arr.range~arr.mean))

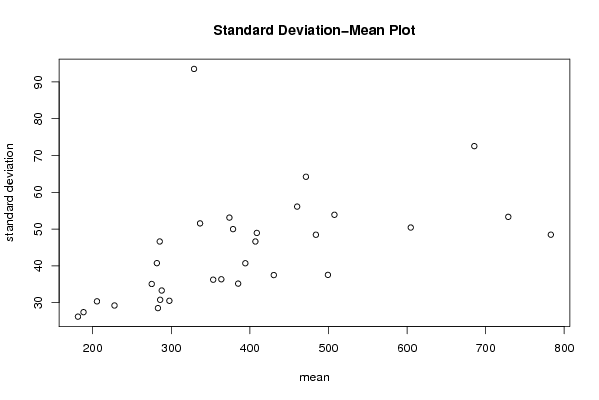

bitmap(file='test1.png')

plot(arr.mean,arr.sd,main='Standard Deviation-Mean Plot',xlab='mean',ylab='standard deviation')

dev.off()

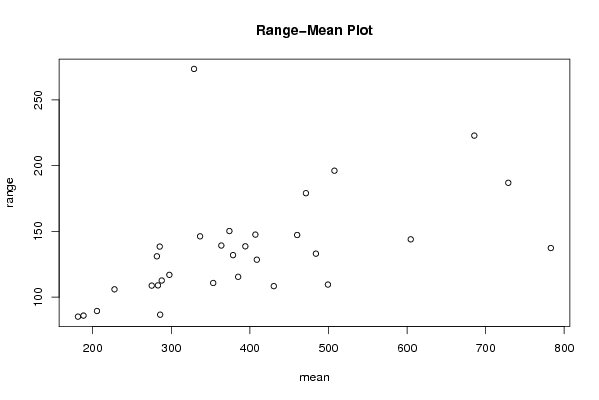

bitmap(file='test2.png')

plot(arr.mean,arr.range,main='Range-Mean Plot',xlab='mean',ylab='range')

dev.off()

load(file='createtable')

a<-table.start()

a<-table.row.start(a)

a<-table.element(a,'Standard Deviation-Mean Plot',4,TRUE)

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'Section',header=TRUE)

a<-table.element(a,'Mean',header=TRUE)

a<-table.element(a,'Standard Deviation',header=TRUE)

a<-table.element(a,'Range',header=TRUE)

a<-table.row.end(a)

for (j in 1:np) {

a<-table.row.start(a)

a<-table.element(a,j,header=TRUE)

a<-table.element(a,arr.mean[j])

a<-table.element(a,arr.sd[j] )

a<-table.element(a,arr.range[j] )

a<-table.row.end(a)

}

a<-table.end(a)

table.save(a,file='mytable.tab')

a<-table.start()

a<-table.row.start(a)

a<-table.element(a,'Regression: S.E.(k) = alpha + beta * Mean(k)',2,TRUE)

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'alpha',header=TRUE)

a<-table.element(a,lm1$coefficients[[1]])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'beta',header=TRUE)

a<-table.element(a,lm1$coefficients[[2]])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'S.D.',header=TRUE)

a<-table.element(a,summary(lm1)$coefficients[2,2])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'T-STAT',header=TRUE)

a<-table.element(a,summary(lm1)$coefficients[2,3])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'p-value',header=TRUE)

a<-table.element(a,summary(lm1)$coefficients[2,4])

a<-table.row.end(a)

a<-table.end(a)

table.save(a,file='mytable1.tab')

a<-table.start()

a<-table.row.start(a)

a<-table.element(a,'Regression: ln S.E.(k) = alpha + beta * ln Mean(k)',2,TRUE)

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'alpha',header=TRUE)

a<-table.element(a,lnlm1$coefficients[[1]])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'beta',header=TRUE)

a<-table.element(a,lnlm1$coefficients[[2]])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'S.D.',header=TRUE)

a<-table.element(a,summary(lnlm1)$coefficients[2,2])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'T-STAT',header=TRUE)

a<-table.element(a,summary(lnlm1)$coefficients[2,3])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'p-value',header=TRUE)

a<-table.element(a,summary(lnlm1)$coefficients[2,4])

a<-table.row.end(a)

a<-table.row.start(a)

a<-table.element(a,'Lambda',header=TRUE)

a<-table.element(a,1-lnlm1$coefficients[[2]])

a<-table.row.end(a)

a<-table.end(a)

table.save(a,file='mytable2.tab')

|